Overview

What is Cluster Autoscaler?

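Cluster Autoscaler (CA) adjusts the number of worker nodes in a cluster.

The Horizontal Pod Autoscaler (HPA) watches the resource usage of pods and adds pods once usage exceeds a configured threshold.

If the HPA keeps adding pods, the worker nodes eventually run out of resources, and the new pods cannot be scheduled and stay in the Pending state.

CA detects pods that are Pending because of insufficient resources and adds worker nodes so that they can be scheduled.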
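The HPA step described above can be made concrete with the replica formula from the Kubernetes documentation, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below is only an illustration: the function name `hpa_desired_replicas` is hypothetical, and it leaves out the tolerance band and the minReplicas/maxReplicas clamping that the real controller applies.

```shell
#!/bin/sh
# Hypothetical helper: approximates the HPA replica calculation
#   desired = ceil(current_replicas * current_utilization / target_utilization)
# Arguments are integers (utilization in percent of the CPU request).
# The real controller also applies a tolerance band and clamps the result
# to minReplicas/maxReplicas; both are omitted here.
hpa_desired_replicas() {
  current_replicas=$1
  current_util=$2
  target_util=$3
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (current_replicas * current_util + target_util - 1) / target_util ))
}

# One pod running at 250% of its CPU request against a 50% target
hpa_desired_replicas 1 250 50   # prints 5
```

Once the requested replicas no longer fit on the existing worker nodes, the extra pods stay Pending, which is the signal CA reacts to.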

Note: Karpenter is an open-source project that serves the same purpose and is worth a look as an alternative.

1-1. Create the policy JSON

vi cluster-autoscaler-policy.json

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup"
      ],
      "Resource": "*",
      "Condition": {
        "StringEquals": {
          "aws:ResourceTag/k8s.io/cluster-autoscaler/my-cluster": "owned"
        }
      }
    },
    {
      "Sid": "VisualEditor1",
      "Effect": "Allow",
      "Action": [
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeAutoScalingGroups",
        "ec2:DescribeLaunchTemplateVersions",
        "ec2:DescribeInstanceTypes",
        "autoscaling:DescribeTags",
        "autoscaling:DescribeLaunchConfigurations"
      ],
      "Resource": "*"
    }
  ]
}

1-2. Deploy the policy

aws iam create-policy \
  --policy-name AmazonEKSClusterAutoscalerPolicy \
  --policy-document file://cluster-autoscaler-policy.json

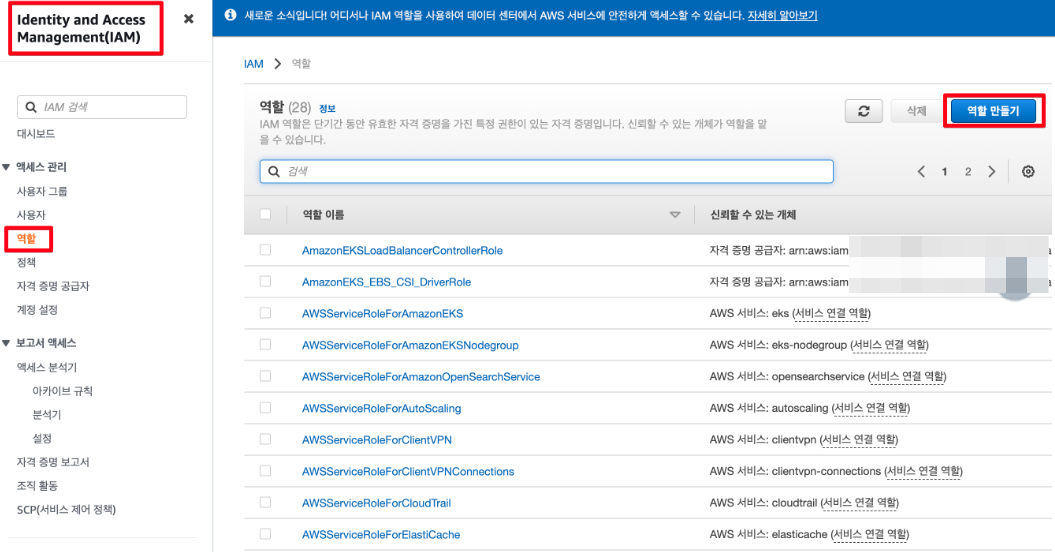

2-1. Create the role

1. https://console.aws.amazon.com/iam/에서 IAM 콘솔을 엽니다.

2. 왼쪽 탐색 창에서 역할(Roles)을 선택합니다. 그런 다음 역할 생성(Create role)을 선택합니다.

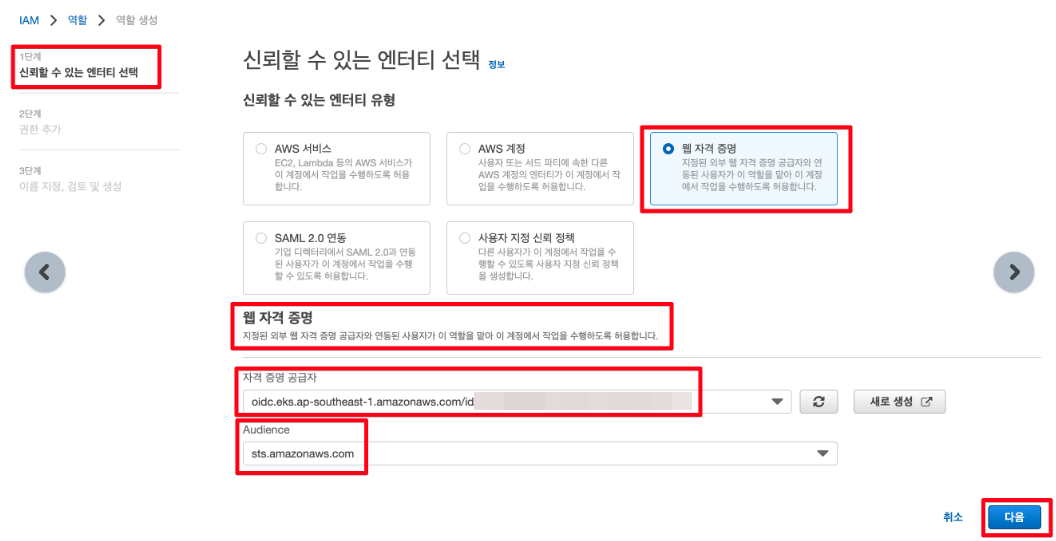

3. 신뢰할 수 있는 엔터티 유형(Trusted entity type) 섹션에서 웹 자격 증명(Web identity)을 선택합니다.

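In the Web identity section, for Identity provider choose the OpenID Connect provider URL of your cluster, and for Audience choose sts.amazonaws.com. Then choose Next.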

4. In the Filter policies box, enter AmazonEKSClusterAutoscalerPolicy, then select the check box to the left of the policy name returned by the search.

Choose Next.

5. For Role name, enter a unique name for the role, such as AmazonEKSClusterAutoscalerRole.

For Description, enter descriptive text such as Amazon EKS - Cluster autoscaler role.

Choose Create role.

6. After the role is created, choose it in the console to open it for editing.

Choose the Trust relationships tab, then choose Edit trust policy.

Add the following entry to the condition block of the trust policy:

"oidc.eks.ap-southeast-1.amazonaws.com/id/310E05BE09F95AEA8901F399DC327578:sub": "system:serviceaccount:kube-system:cluster-autoscaler"

3-1. Download the Cluster Autoscaler YAML

curl -o cluster-autoscaler-autodiscover.yaml https://raw.githubusercontent.com/kubernetes/autoscaler/master/cluster-autoscaler/cloudprovider/aws/examples/cluster-autoscaler-autodiscover.yaml

3-2. Edit the Cluster Autoscaler YAML

vi cluster-autoscaler-autodiscover.yaml

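In the ServiceAccount, add the IAM role annotation:

metadata:
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::xxx:role/AmazonEKSClusterAutoscalerRole

In the Deployment's container command, set the cluster name in the auto-discovery tag:

- --node-group-auto-discovery=asg:tag=k8s.io/cluster-autoscaler/enabled,k8s.io/cluster-autoscaler/prod-xxx-eks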
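The cluster-name edit above can also be scripted instead of done by hand in vi. The sketch below assumes the downloaded manifest still contains the upstream placeholder `<YOUR CLUSTER NAME>`; the helper name `set_cluster_name` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: replace the cluster-name placeholder in the manifest.
# Assumes the placeholder text is exactly "<YOUR CLUSTER NAME>".
# Usage: set_cluster_name <manifest-file> <cluster-name>
set_cluster_name() {
  manifest=$1
  cluster=$2
  # Write to a temp file and move it back, which behaves the same
  # with GNU sed and BSD sed (no reliance on "sed -i").
  sed "s|<YOUR CLUSTER NAME>|${cluster}|g" "$manifest" > "${manifest}.tmp" &&
    mv "${manifest}.tmp" "$manifest"
}
```

For this post's cluster the call would be `set_cluster_name cluster-autoscaler-autodiscover.yaml prod-xxx-eks`. Auto-discovery only picks up Auto Scaling groups that carry both tags named in the flag, so it is worth checking the group tags if no node group is found.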

3-3. Deploy Cluster Autoscaler

kubectl apply -f cluster-autoscaler-autodiscover.yaml -n kube-system

serviceaccount/cluster-autoscaler created

clusterrole.rbac.authorization.k8s.io/cluster-autoscaler created

role.rbac.authorization.k8s.io/cluster-autoscaler created

clusterrolebinding.rbac.authorization.k8s.io/cluster-autoscaler created

rolebinding.rbac.authorization.k8s.io/cluster-autoscaler created

deployment.apps/cluster-autoscaler created

4-1. Annotate the service account

kubectl annotate serviceaccount cluster-autoscaler \
  -n kube-system \
  eks.amazonaws.com/role-arn=arn:aws:iam::ACCOUNT_ID:role/AmazonEKSClusterAutoscalerRole
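The annotation only works if the role's trust policy allows this service account to assume it, which is what the trust policy edit in step 2-1 sets up. As a rough sketch, the complete trust policy would look like the document generated below. This is not copied from the console: the account ID 111122223333 is a placeholder, the file name trust-policy.json is arbitrary, and the `aud` condition is an assumption based on what the console normally pre-populates for a web identity role. The OIDC provider ID is the one used in step 2-1.

```shell
#!/bin/sh
# Placeholder account ID: replace with your own.
ACCOUNT_ID="111122223333"
# OIDC provider of the cluster, as used in step 2-1.
OIDC_PROVIDER="oidc.eks.ap-southeast-1.amazonaws.com/id/310E05BE09F95AEA8901F399DC327578"

# Write the trust policy. The ":sub" condition restricts the role to the
# cluster-autoscaler service account in the kube-system namespace.
cat > trust-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:aud": "sts.amazonaws.com",
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:kube-system:cluster-autoscaler"
        }
      }
    }
  ]
}
EOF
```

If you prefer the CLI to the console, the file can be applied with `aws iam update-assume-role-policy --role-name AmazonEKSClusterAutoscalerRole --policy-document file://trust-policy.json`.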

4-2. Annotate the deployment

kubectl patch deployment cluster-autoscaler \
  -n kube-system \
  -p '{"spec":{"template":{"metadata":{"annotations":{"cluster-autoscaler.kubernetes.io/safe-to-evict": "false"}}}}}'

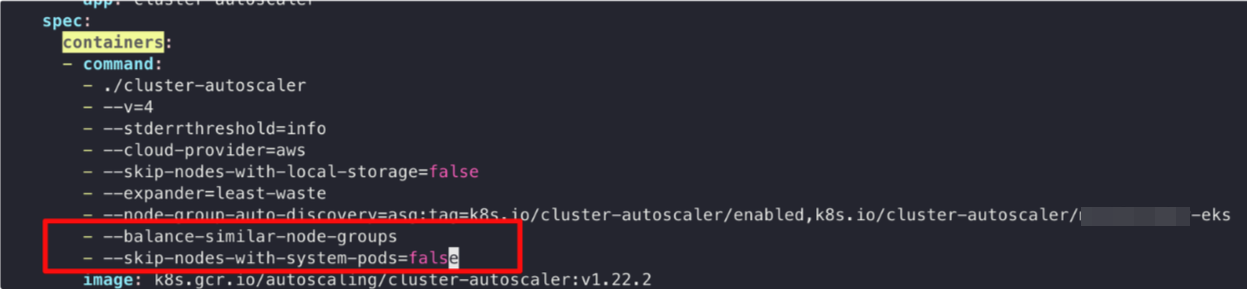

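4-3. Set options on the deployment

Edit the deployment and add the flags you need (for example, those listed in the options section at the end of this post) to the container command.

kubectl edit deploy cluster-autoscaler -n kube-system

4-4. Set the image on the deployment

Set the image tag to the Cluster Autoscaler release that matches the cluster's Kubernetes minor version (v1.22.2 in this example).

kubectl edit deploy cluster-autoscaler -n kube-system

image: k8s.gcr.io/autoscaling/cluster-autoscaler:v1.22.2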

5. Verify the Cluster Autoscaler installation

kubectl -n kube-system logs -f deployment.apps/cluster-autoscaler

aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='prod-xxx-eks']].[AutoScalingGroupName, MinSize,MaxSize,DesiredCapacity]" --output table

vi php-apache.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 1
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        image: registry.k8s.io/hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
      nodeSelector:
        nodegroupname: test-ng
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: dw
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50

kubectl apply -f php-apache.yaml -n dw

watch "kubectl get pod,hpa -n dw; echo; echo ; kubectl top pod -n dw; echo ;echo; aws autoscaling describe-auto-scaling-groups --query \"AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='prod-xxx-eks']].[AutoScalingGroupName, MinSize,MaxSize,DesiredCapacity]\" --output table"

kubectl run -i -n dw \
  --tty load-generator \
  --rm --image=busybox \
  --restart=Never \
  -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

kubectl get pod -n dw

pod/php-apache-5f8766dbcb-59jwx 0/1 Pending 0 9s

pod/php-apache-5f8766dbcb-5mzsv 0/1 Pending 0 9s

kubectl describe pod/php-apache-5f8766dbcb-59jwx -n dw

Type Reason Age From Message

---- ------ ---- ---- -------

Normal TriggeredScaleUp 3m11s cluster-autoscaler pod triggered scale-up: [{prod-xxx-eks-test-node-group-xxx 2->3 (max: 4)}]

Warning FailedScheduling 2m12s (x2 over 3m21s) default-scheduler 0/8 nodes are available: 2 Insufficient cpu, 6 node(s) didn't match Pod's node affinity/selector.

kubectl get ev -n dw |grep pod/php-apache-5f8766dbcb-59jwx

13m Warning FailedScheduling pod/php-apache-5f8766dbcb-59jwx 0/8 nodes are available: 2 Insufficient cpu, 6 node(s) didn't match Pod's node affinity/selector.

14m Normal TriggeredScaleUp pod/php-apache-5f8766dbcb-59jwx pod triggered scale-up: [{prod-xxx-eks-test-node-group-xxx 2->3 (max: 4)}]

aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='prod-xxx-eks']].[AutoScalingGroupName, MinSize,MaxSize,DesiredCapacity]" --output table

----------------------------------------------------------------------------------------------

| DescribeAutoScalingGroups |

+-----------------------------------------------------------------------------+----+----+----+

| eks-prod-xxx-eks-app-node-group-xxx | 3 | 3 | 3 |

| eks-prod-xxx-eks-infra-node-group-xxx | 3 | 3 | 3 |

| eks-prod-xxx-eks-test-node-group-xxx | 2 | 4 | 3 |

+-----------------------------------------------------------------------------+----+----+----+

kubectl get no --show-labels |grep test-ng |awk '{print $1,$2,$3,$4}'

ip-xxx.ap-southeast-1.compute.internal Ready <none> 15m

ip-xxx.ap-southeast-1.compute.internal Ready <none> 3h31m

ip-xxx.ap-southeast-1.compute.internal Ready <none> 4h9m

kubectl describe pod/php-apache-5f8766dbcb-59jwx -n dw

Normal SandboxChanged 42s (x12 over 72s) kubelet Pod sandbox changed, it will be killed and re-created.

Normal Pulling 41s kubelet Pulling image "registry.k8s.io/hpa-example"

kubectl get ev -n dw |grep pod/php-apache-5f8766dbcb-59jwx

11m Normal Pulling pod/php-apache-5f8766dbcb-59jwx Pulling image "registry.k8s.io/hpa-example"

Worker node scale-up

Worker node scale-down

Node event information

kubectl describe node ip-xxx.ap-southeast-1.compute.internal

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScaleDown 2s cluster-autoscaler node removed by cluster autoscaler

Cluster Autoscaler log information

kubectl logs cluster-autoscaler-7f467f7c8b-6nmjf -n kube-system

I1014 10:01:52.850847 1 scale_down.go:448] Node ip-10-223-69-47.ap-southeast-1.compute.internal - cpu utilization 0.164894

I1014 10:01:52.851457 1 cluster.go:345] Pod dw/php-apache-5f8766dbcb-g7r26 can be moved to ip-10-223-69-47.ap-southeast-1.compute.internal

I1014 10:01:52.851587 1 static_autoscaler.go:510] ip-10-223-69-47.ap-southeast-1.compute.internal is unneeded since 2022-10-14 09:51:47.768395971 +0000 UTC m=+24420.518339893 duration 10m4.880789629s

I1014 10:01:52.851799 1 scale_down.go:829] ip-10-223-69-47.ap-southeast-1.compute.internal was unneeded for 10m4.880789629s

I1014 10:01:52.851858 1 scale_down.go:1104] Scale-down: removing empty node ip-10-223-69-47.ap-southeast-1.compute.internal

I1014 10:01:52.852216 1 event_sink_logging_wrapper.go:48] Event(v1.ObjectReference{Kind:"ConfigMap", Namespace:"kube-system", Name:"cluster-autoscaler-status", UID:"d1fef2a9-fce5-45de-83a7-a8a2c2cf133d", APIVersion:"v1", ResourceVersion:"28608753", FieldPath:""}): type: 'Normal' reason: 'ScaleDownEmpty' Scale-down: removing empty node ip-10-223-69-47.ap-southeast-1.compute.internal

I1014 10:01:52.878177 1 delete.go:103] Successfully added ToBeDeletedTaint on node ip-10-223-69-47.ap-southeast-1.compute.internal

I1014 10:01:53.226093 1 event_sink_logging_wrapper.go:48] Event(v1.ObjectReference{Kind:"Node", Namespace:"", Name:"ip-10-223-69-47.ap-southeast-1.compute.internal", UID:"67a630b6-eeae-400d-ae7e-3729132ce21d", APIVersion:"v1", ResourceVersion:"28607260", FieldPath:""}): type: 'Normal' reason: 'ScaleDown' node removed by cluster autoscaler
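In the log above the node is removed once it has been unneeded for a little over 10 minutes, which is consistent with the default `--scale-down-unneeded-time` of 10 minutes. To pull just these decision lines out of a verbose log, a small filter like the one below can help. It is a sketch: the function name `ca_scale_down_lines` is hypothetical and the patterns are taken from the log lines shown in this post, so they may need adjusting for other Cluster Autoscaler versions.

```shell
#!/bin/sh
# Hypothetical filter: keep only the log lines that explain a scale-down
# decision (node marked unneeded, removal started, deletion taint added).
# Reads the log from stdin and writes matching lines to stdout.
ca_scale_down_lines() {
  grep -E 'is unneeded since|was unneeded for|Scale-down: removing|ToBeDeletedTaint'
}
```

Typical use is to pipe the pod log through it, for example `kubectl logs deployment/cluster-autoscaler -n kube-system | ca_scale_down_lines`.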

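Options

--scale-down-delay-after-add: how long after a scale-up before scale-down evaluation resumes (default 10m)

--scale-down-delay-after-delete: how long after a node deletion before scale-down evaluation resumes (defaults to the scan interval)

--scale-down-delay-after-failure: how long after a failed scale-down before scale-down evaluation resumes (default 3m)

Example: --scale-down-delay-after-add=5m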
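Instead of adding a flag interactively with `kubectl edit`, it can be appended with a JSON Patch. The sketch below only generates the patch file. It assumes the flags live in the first container's `command` list, as in the upstream example manifest; the file name ca-flag-patch.json is arbitrary.

```shell
#!/bin/sh
# Build a JSON Patch that appends one flag to the first container's
# command list. FLAG is the example value from this post.
# Assumption: the deployment keeps its flags under
# spec.template.spec.containers[0].command.
FLAG="--scale-down-delay-after-add=5m"

cat > ca-flag-patch.json <<EOF
[
  {
    "op": "add",
    "path": "/spec/template/spec/containers/0/command/-",
    "value": "${FLAG}"
  }
]
EOF
```

The patch can then be applied with `kubectl patch deployment cluster-autoscaler -n kube-system --type=json -p "$(cat ca-flag-patch.json)"`, which triggers a rollout of the Cluster Autoscaler pod with the new flag.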
